From Wikipedia, the free encyclopedia

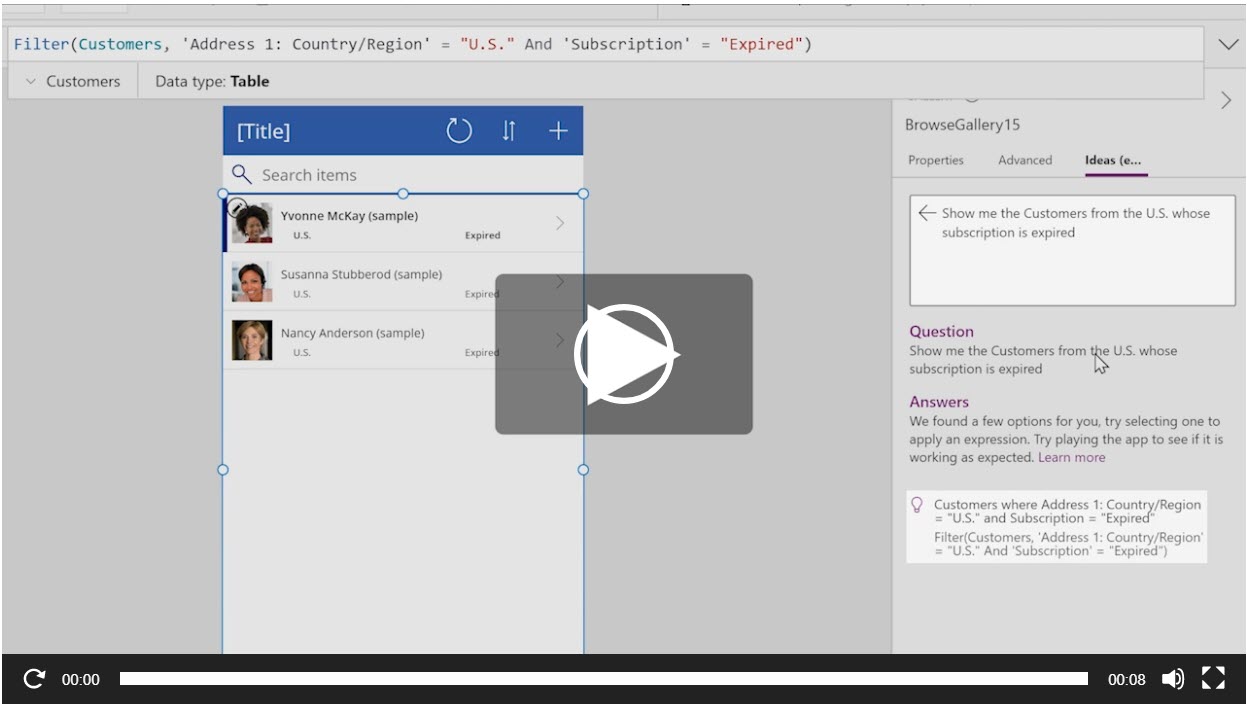

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text.

It is the third-generation language prediction model in the GPT-n series (and the successor to GPT-2) created by OpenAI, a San Francisco-based artificial intelligence research laboratory.[2] GPT-3's full version has a capacity of 175 billion machine learning parameters. GPT-3, which was introduced in May 2020 and was in beta testing as of July 2020,[3] is part of a trend in natural language processing (NLP) systems of pre-trained language representations.

This is a real example; see https://www.ambrogiorobot.com/en. Disclosure: LF owns one.

See https://en.wikipedia.org/wiki/ELIZA. A classic book on the ELIZA effect and on AI in general, still worth reading, is Weizenbaum (1976).

In 2014 some people claimed, mistakenly, that a chatbot had passed the test. Its name is “Eugene Goostman”, and you can check it yourself by playing with it here: http://eugenegoostman.elasticbeanstalk.com/. When it was tested, I was one of the judges, and what I noticed was that it was some humans who failed to pass the test, by asking the sort of questions that I have called here “irreversible”, such as (real examples, asked by a BBC journalist) “do you believe in God?” and “do you like ice-cream?”. Even a simple machine tossing coins would “pass” that kind of test.

See, for example, the Winograd Schema Challenge (Levesque et al. 2012).

For an excellent, technical and critical analysis, see McAteer (2020): https://matthewmcateer.me/blog/messing-with-gpt-3/. About the “completely unrealistic expectations about what large-scale language models such as GPT-3 can do”, see Yann LeCun (Vice President, Chief AI Scientist at Facebook App) here: https://www.facebook.com/yann.lecun/posts/10157253205637143.

The following note was written by the journalists, not the software: “[…] GPT-3 produced eight different outputs, or essays. Each was unique, interesting and advanced a different argument. The Guardian could have just run one of the essays in its entirety. However, we chose instead to pick the best parts of each, in order to capture the different styles and registers of the AI. Editing GPT-3’s op-ed was no different to editing a human op-ed. We cut lines and paragraphs, and rearranged the order of them in some places. Overall, it took less time to edit than many human op-eds.” (GPT-3 2020).

For some philosophical examples concerning GPT-3, see http://dailynous.com/2020/07/30/philosophers-gpt-3/.

For a more extended, and sometimes quite entertaining, analysis, see Lacker (2020): https://lacker.io/ai/2020/07/06/giving-gpt-3-a-turing-test.html.

For an interesting analysis, see Elkins and Chun (2020).